Bagging in Machine Learning Explained

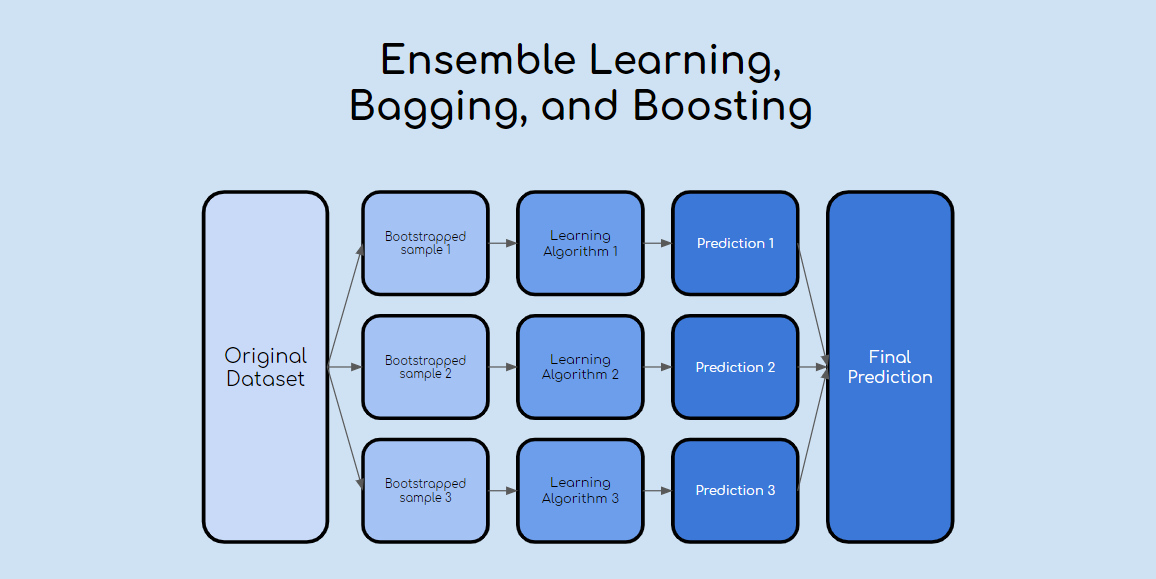

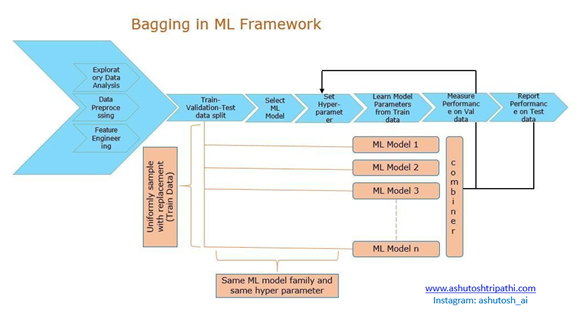

Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms.

Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset.

Bagging builds on the majority voting principle. The technique is useful for both regression and statistical classification. Let's assume we have a sample dataset of 1000.
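A minimal sketch of the aggregation step, assuming each base model has already produced a prediction: classification takes a majority vote, regression takes an average. The function names `majority_vote` and `average_prediction` are illustrative, not from any library.

```python
from collections import Counter
from statistics import mean

def majority_vote(predictions):
    """Return the label predicted most often (ties go to the label seen first)."""
    return Counter(predictions).most_common(1)[0][0]

def average_prediction(predictions):
    """Return the mean of the numeric predictions (the regression case)."""
    return mean(predictions)

# Hypothetical outputs of five base models for a single input.
class_votes = ["spam", "ham", "spam", "spam", "ham"]
value_votes = [2.0, 3.0, 4.0]

print(majority_vote(class_votes))
print(average_prediction(value_votes))
```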

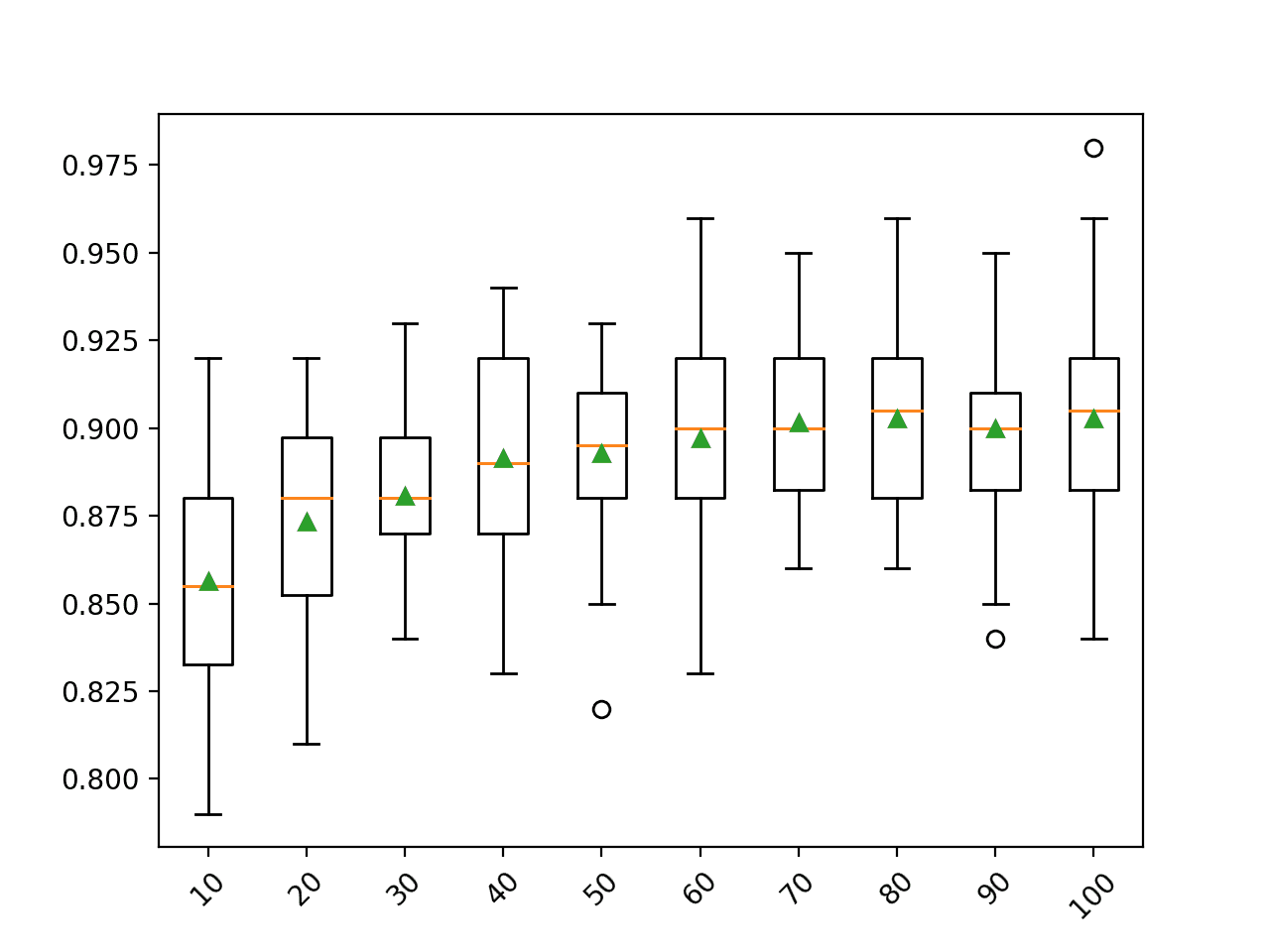

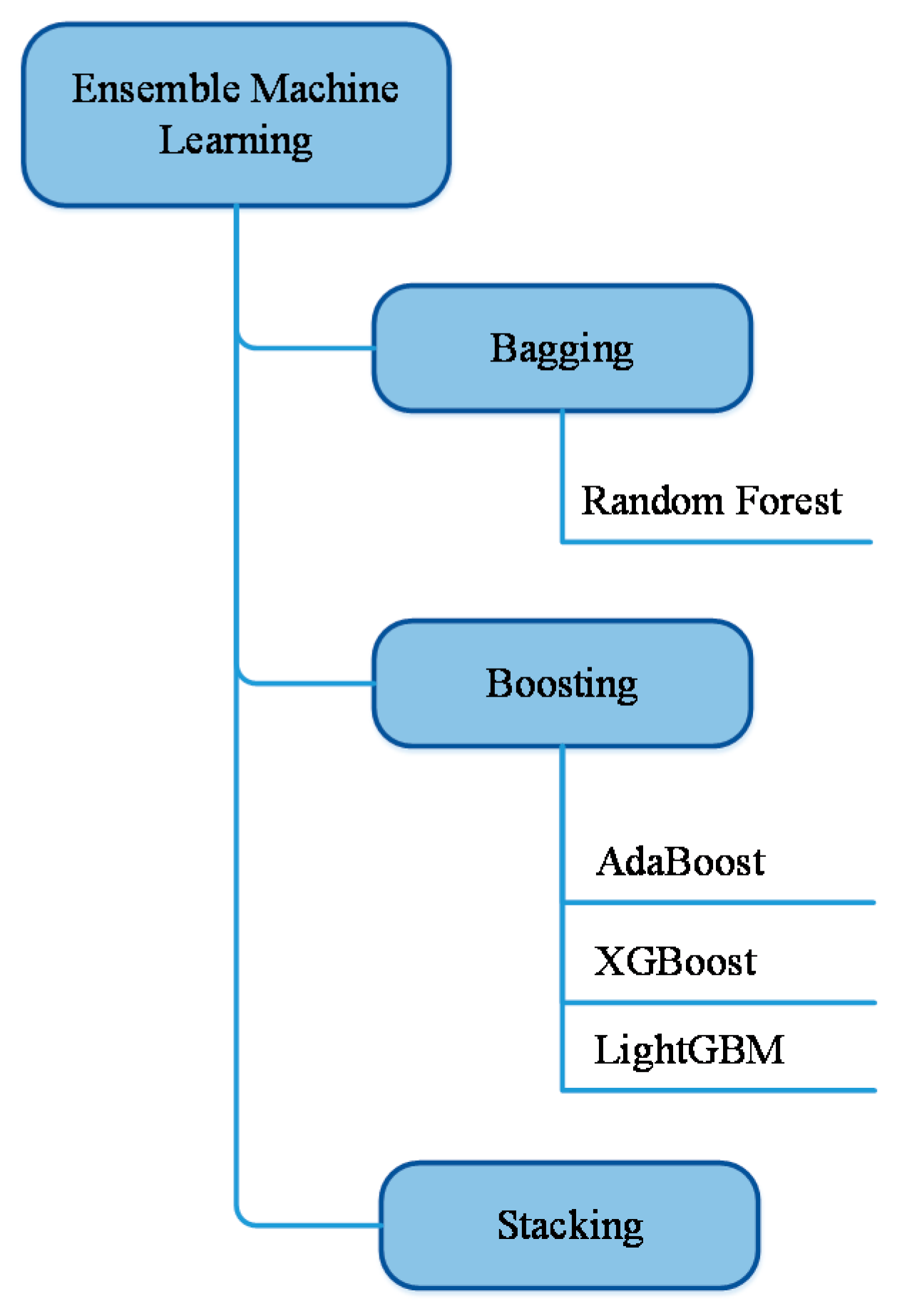

Bagging aims to improve the accuracy and performance of machine learning algorithms. It does this by taking random subsets of an original dataset, with replacement, and fitting a model to each subset. Ensemble machine learning can be mainly categorized into bagging and boosting.
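Sampling with replacement can be sketched in a few lines. This is an illustration under stated assumptions: the 1000-item list stands in for a real dataset, and `bootstrap_sample` is a hypothetical helper name.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(0)        # fixed seed so the run is repeatable
dataset = list(range(1000))   # stand-in for a 1000-row dataset
sample = bootstrap_sample(dataset, rng)

# Because items can repeat, only part of the dataset appears in each sample.
unique_fraction = len(set(sample)) / len(dataset)
print(len(sample), round(unique_fraction, 2))
```

For large datasets the fraction of distinct items in a bootstrap sample approaches 1 - 1/e, or about 63%, so each base model sees a somewhat different view of the data.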

So before looking at bagging and boosting, let's get an idea of what ensemble learning is. It is the technique of combining several models to produce a single prediction. Decision trees in an ensemble tend to have a lot of similarity and correlation in their predictions.
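Why correlation between trees matters can be seen from a standard result, stated here under simplifying assumptions that are not from the original text: $B$ base predictions $\hat{f}_1, \dots, \hat{f}_B$, each with variance $\sigma^2$ and with the same pairwise correlation $\rho$.

```latex
\operatorname{Var}\!\left( \frac{1}{B} \sum_{b=1}^{B} \hat{f}_b(x) \right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

As $B$ grows the second term vanishes, but the first does not. Averaging highly correlated trees therefore reduces variance less than averaging nearly independent ones.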

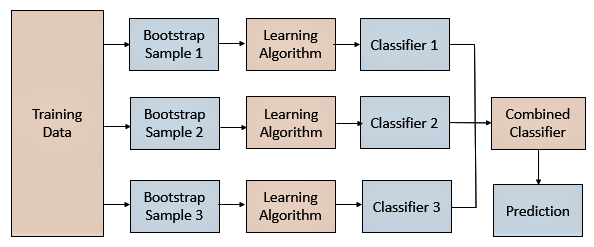

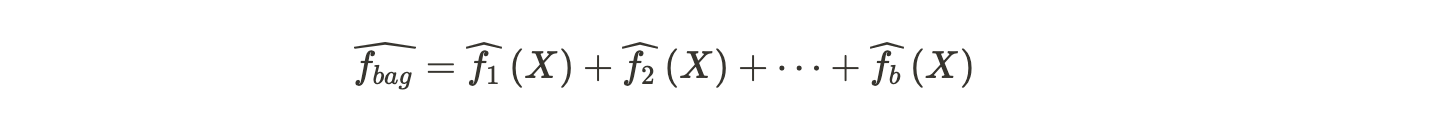

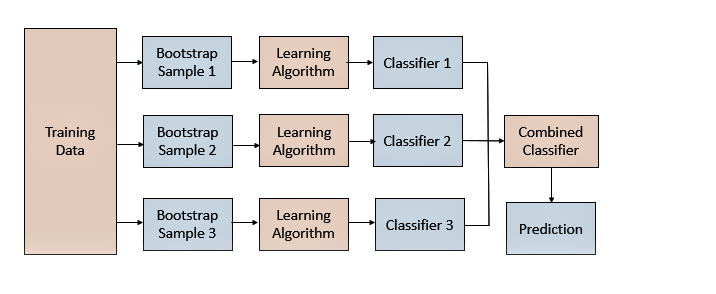

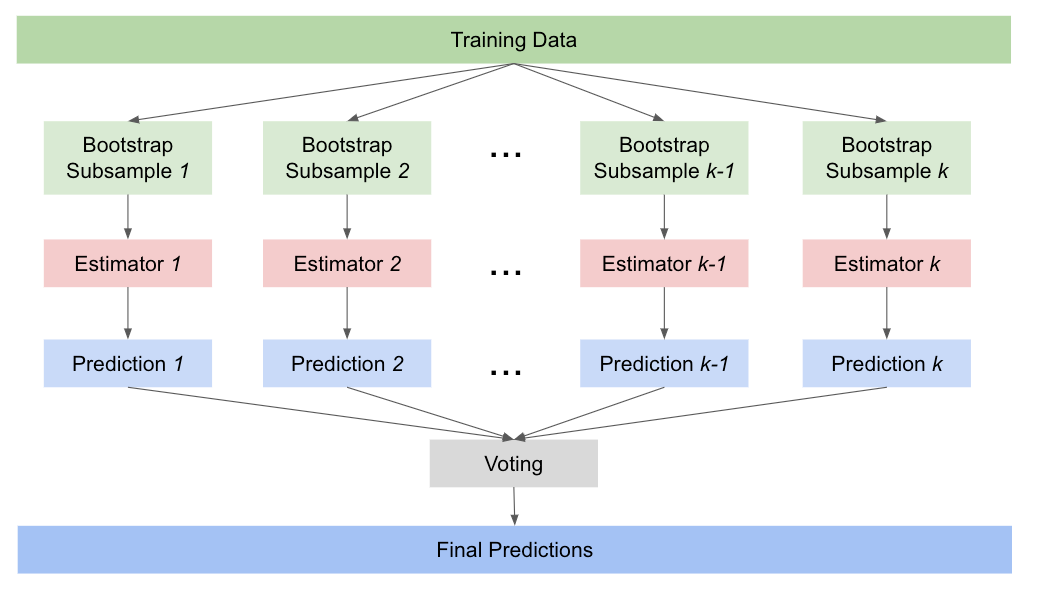

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. It consists of fitting several base models on different bootstrap samples and building an ensemble model that averages the results of these weak learners.
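The procedure can be sketched end to end with a deliberately simple base model. This is a minimal illustration, not a reference implementation: the base learner is a one-split regression tree (a stump), the data are synthetic, and the names `fit_stump` and `fit_bagged` are hypothetical.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Fit a one-split regression tree by minimising squared error."""
    best = None
    for t in sorted(set(xs))[1:]:  # candidate thresholds; both sides stay non-empty
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        lm, rm = mean(left), mean(right)
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    if best is None:  # every x identical: fall back to the overall mean
        m = mean(ys)
        return lambda x: m
    _, t, lm, rm = best
    return lambda x: lm if x < t else rm

def fit_bagged(xs, ys, n_models, rng):
    """Fit n_models stumps on bootstrap samples and average their predictions."""
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap row indices
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)

# Synthetic data (an assumption for this sketch): a noisy step from 0 to 10 at x = 5.
rng = random.Random(0)
xs = [i / 10 for i in range(100)]
ys = [(0.0 if x < 5 else 10.0) + rng.gauss(0, 1) for x in xs]

predict = fit_bagged(xs, ys, n_models=25, rng=rng)
print(round(predict(2.0), 2), round(predict(8.0), 2))
```

For classification, the final averaging step would be replaced by a majority vote over the base models' predicted labels.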

A new bootstrap sample is drawn each time a model is trained. Bagging is short for bootstrap aggregation and is used to decrease the variance of the prediction model. In bagging, a random sample of the training data is drawn with replacement.

Bagging, also known as bootstrap aggregating, is the process in which multiple models of the same learning algorithm are trained with bootstrapped samples of the original dataset. Bagging and boosting are the two popular ensemble methods. By joseph, May 1, 2022.

Bagging is a parallel method: the base learners are fit independently of each other, so they can be trained at the same time.
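Because no base learner depends on another, the fitting step maps naturally onto a worker pool. The sketch below is illustrative only: the "model" is just the mean of a bootstrap sample, and `fit_one` is a hypothetical helper.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def fit_one(args):
    """Fit one base learner on its own bootstrap sample (here: the sample mean)."""
    data, seed = args
    rng = random.Random(seed)  # per-learner RNG, so learners share no state
    sample = [rng.choice(data) for _ in data]
    return mean(sample)

data = [float(i) for i in range(100)]
tasks = [(data, seed) for seed in range(20)]

# Each task is independent, so the pool can run them in any order.
with ThreadPoolExecutor(max_workers=4) as pool:
    estimates = list(pool.map(fit_one, tasks))

bagged = mean(estimates)  # aggregate the independent estimates
print(len(estimates), round(bagged, 1))
```

Threads keep the example short. For CPU-bound training in CPython, `ProcessPoolExecutor` from the same module is the usual substitute. Boosting, by contrast, fits its learners one after another, so it does not parallelise this way.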
